Developer Experience and ALM considerations for a hybrid batch data pipeline using ADFv2.

This article is part of a series:

- Architecture discussion

- ALM and Infrastructure *<- you are here

- Notable implementation details

General environment: Azure

We will implement the solution on Azure, the public cloud platform from Microsoft. All the required services can run on a free Azure account.

Tools

To provision all the resources (Data factories, storage accounts, VMs…), we will use the Azure portal (your color scheme may vary):

There we will provision one resource group per project/environment. We will also use tags to organize resources.

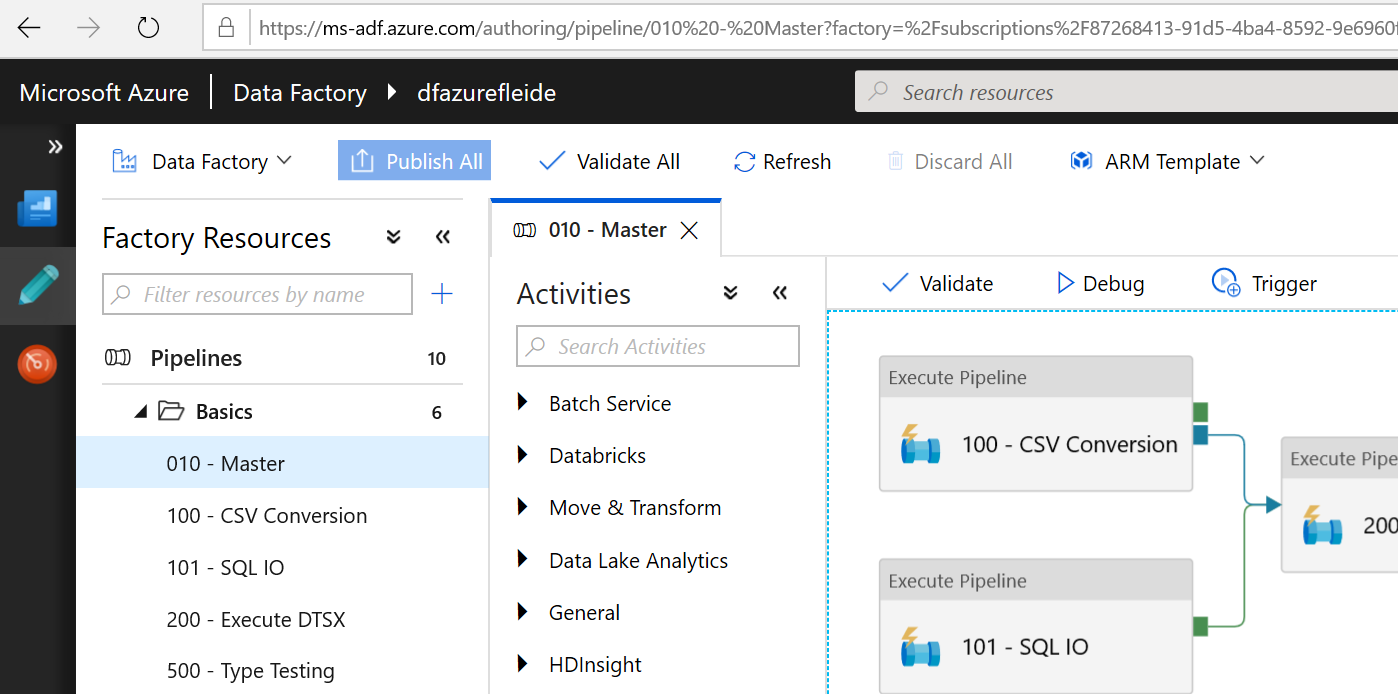

Once the factory is created, all of the fun will happen in the Data Factory portal (even debugging).

We will use Azure Storage Explorer to move files to/from blob stores and file shares. It’s a free download and works on Windows, macOS and Linux.

To develop the Logic App used for deleting files, we can use the Azure portal (the best option to get started), Visual Studio (not really recommended at the moment) or Visual Studio Code (no visual interface).

Finally, we can leverage PowerShell (via the ISE or the Cloud Shell) and the Azure modules if things need to get scripted.

Continuous Integration and Deployment (CI/CD)

Azure DevOps (formerly VSTS / Visual Studio Online) will be used for source control (Azure Repos) and to manage the deployment pipeline (Azure Pipelines).

Using Azure DevOps will allow us to do CI/CD directly from the Data Factory portal.

The other asset that needs attention here is the Logic App used for deletion. At the time of writing, there is no obvious way to treat the Logic App as a first-class citizen in terms of ALM in our project: there is no easy way to develop, version and deploy Logic App code to multiple environments with parameters (connection strings…). Logic Apps are versioned in the service, but there is no native notion of environments. That’s why in this project we will treat the Logic App as an infrastructure component, deployed once in every environment and updated rarely, with no business logic in it.

Monitoring

During development, we will monitor ADFv2 pipeline runs visually in the Data Factory portal.

Once deployed, we will also use Azure Monitor metrics (in the metrics explorer) and build dashboards with them. We’ll also define alerts. If need be, this data can be persisted using Log Analytics.

Infrastructure

Infrastructure components are the assets that are provisioned once in each environment (Development/Integration/Production) and are not included in the deployment pipeline. We won’t script any of the infrastructure provisioning in this project, but it can be done via ARM templates.

We won’t discuss the provisioning of the VMs, VNet and other required bits, as these should be pretty straightforward operations.
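For readers who do want to script the provisioning, here is a minimal sketch of what an ARM template for one factory could look like, generated from Python so it can be reused per environment. The parameter names (`factoryName`, `environment`), the tag key and the environment short codes are assumptions made for illustration; the resource type and API version are the ones used for ADFv2 factories, but check them against the current ARM reference before deploying.

```python
import json


def data_factory_template() -> dict:
    """Build a minimal ARM template declaring a single ADFv2 factory.

    Parameter names and the tag key are hypothetical, chosen for this sketch.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "factoryName": {"type": "string"},
            # One deployment per environment (Development/Integration/Production).
            "environment": {"type": "string", "allowedValues": ["dev", "int", "prod"]},
        },
        "resources": [
            {
                "type": "Microsoft.DataFactory/factories",
                "apiVersion": "2018-06-01",
                "name": "[parameters('factoryName')]",
                "location": "[resourceGroup().location]",
                # Tags help organize resources, as done manually in the portal.
                "tags": {"environment": "[parameters('environment')]"},
                # System-assigned identity, needed for MSI authentication.
                "identity": {"type": "SystemAssigned"},
                "properties": {},
            }
        ],
    }


template = data_factory_template()
print(json.dumps(template, indent=2))
```

The printed JSON can be saved to a file and deployed into each environment's resource group with the tool of your choice (portal, PowerShell or CLI).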

ADFv2 Assets

We will follow the CI/CD tutorial to set up the Factory itself in all 3 environments. This will result in the creation of 3 factories in the portal.

The self-hosted IR can either be shared among environments, or we’ll create one per environment if need be.

In a factory we will create the following artifacts:

- Integration Runtime

  - Self-hosted IR (how-to)

- Linked Services

  - sFTP connector

  - File Store A, type Azure File Storage, self-hosted IR (reaching in the VNet), authentication via Password (should be Key Vault instead)

  - File Store B, type Azure File Storage, auto resolve IR (Parquet conversion), authentication via Password (should be Key Vault instead)

  - Blob Store, type Azure Blob Store, auto resolve IR, authentication via MSI (how-to, from)
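As an illustration of the "should be Key Vault instead" remark, the sketch below builds the JSON definition of an Azure File Storage linked service whose password is a Key Vault secret reference rather than an inline value. All names (`FileStoreA`, `KeyVaultLS`, `SelfHostedIR`, the host, user and secret names) are placeholders invented for this example, and the property layout reflects my understanding of the Data Factory linked service format, so verify it against the connector documentation.

```python
import json
from typing import Optional


def file_store_linked_service(
    name: str,
    host: str,
    user_id: str,
    secret_name: str,
    key_vault_linked_service: str,
    integration_runtime: Optional[str] = None,
) -> dict:
    """Return a linked service definition with the password stored in Key Vault."""
    definition = {
        "name": name,
        "properties": {
            "type": "AzureFileStorage",
            "typeProperties": {
                "host": host,
                "userId": user_id,
                # Reference to a secret instead of a password kept in the factory.
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": key_vault_linked_service,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                },
            },
        },
    }
    # Without connectVia, the auto resolve integration runtime is used.
    if integration_runtime is not None:
        definition["properties"]["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return definition


# File Store A goes through the self-hosted IR; File Store B uses auto resolve.
file_store_a = file_store_linked_service(
    "FileStoreA", "<file share host A>", "<user A>", "file-store-a-key",
    "KeyVaultLS", integration_runtime="SelfHostedIR",
)
file_store_b = file_store_linked_service(
    "FileStoreB", "<file share host B>", "<user B>", "file-store-b-key", "KeyVaultLS",
)
print(json.dumps(file_store_a, indent=2))
```

The only difference between the two file stores in this sketch is the `connectVia` block, which mirrors the self-hosted versus auto resolve split in the list above.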

Logic App

At the time of writing, Logic Apps can’t be defined as linked services in ADFv2. This is an issue when deploying to multiple environments, as each one will need to use its own Logic App endpoint: we can’t just hard code that endpoint value in the Web Activity using it.

We’ll address that shortcoming by using a pipeline parameter to pass the Logic App URL to the activity that needs it. That way we will be able to change the endpoint at runtime or in the CI/CD pipeline (via the parameter default value).

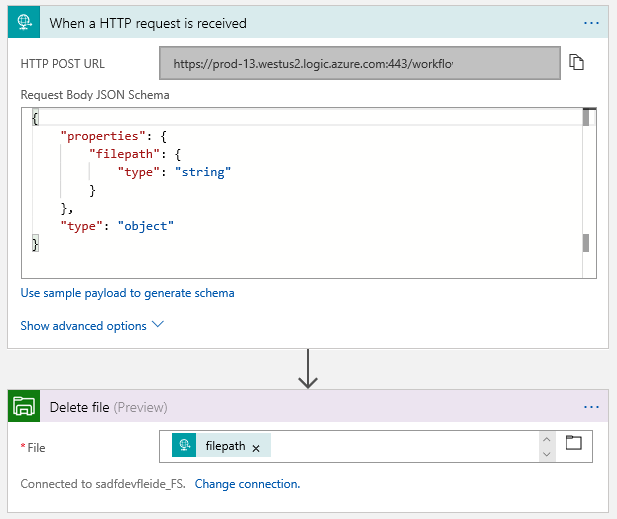
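To make the parameter approach concrete, here is a rough sketch of the relevant part of a pipeline definition: the Web Activity reads its URL from a pipeline parameter, and the parameter's default value is what changes per environment. The pipeline, activity and parameter names (`DeleteProcessedFile`, `CallDeleteLogicApp`, `LogicAppUrl`), the placeholder body and the example URLs are all hypothetical.

```python
import copy
import json


def build_delete_pipeline(default_logic_app_url: str) -> dict:
    """Pipeline definition whose Web Activity URL comes from a parameter."""
    return {
        "name": "DeleteProcessedFile",
        "properties": {
            "parameters": {
                "LogicAppUrl": {"type": "string", "defaultValue": default_logic_app_url},
            },
            "activities": [
                {
                    "name": "CallDeleteLogicApp",
                    "type": "WebActivity",
                    "typeProperties": {
                        # Resolved at runtime from the pipeline parameter.
                        "url": {
                            "value": "@pipeline().parameters.LogicAppUrl",
                            "type": "Expression",
                        },
                        "method": "POST",
                        "headers": {"Content-Type": "application/json"},
                        # Placeholder body; the real one is built with an expression.
                        "body": {"filePath": "<path of the file to delete>"},
                    },
                }
            ],
        },
    }


def for_environment(pipeline: dict, logic_app_url: str) -> dict:
    """Return a copy of the pipeline with the default URL swapped, as a release step might do."""
    updated = copy.deepcopy(pipeline)
    updated["properties"]["parameters"]["LogicAppUrl"]["defaultValue"] = logic_app_url
    return updated


dev_pipeline = build_delete_pipeline("https://dev.example.invalid/delete-file")
prod_pipeline = for_environment(dev_pipeline, "https://prod.example.invalid/delete-file")
print(json.dumps(prod_pipeline["properties"]["parameters"], indent=2))
```

Because the activity never contains the endpoint itself, only the parameter default differs between environments, and a caller can still override it for a single run.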

To implement that Logic App, we will use the following strategy:

The trigger is an HTTP request, the following step is an Azure File Storage > Delete File action. We define a parameter in the Request Body (using the sample payload to generate schema option): the path to the file to be deleted. We then use that parameter as the target of the Delete File action.

To call that Logic App in a Data Factory pipeline, we will copy/paste the HTTP POST URL from the Logic App designer into a Web Activity URL. The Web Activity method will be POST, and we will build its body using an expression (generating the expected JSON syntax inline, see below).
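The sketch below mimics that call from Python, which can be handy for testing the Logic App outside of Data Factory. It assumes the request body has a single property named `filePath` and a pipeline parameter named `FilePath`; both names are hypothetical, as is the example URL. `BODY_EXPRESSION` shows one way the inline JSON could be written with `concat` in the Web Activity, and `render_body` reproduces the same string concatenation.

```python
import json
import urllib.request

# One possible Web Activity body expression (property and parameter names assumed).
BODY_EXPRESSION = "@concat('{\"filePath\":\"', pipeline().parameters.FilePath, '\"}')"


def render_body(file_path: str) -> str:
    """Python equivalent of the concat expression: plain concatenation, no escaping."""
    return '{"filePath":"' + file_path + '"}'


def build_delete_request(logic_app_url: str, file_path: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST request the Web Activity would issue."""
    body = render_body(file_path)
    json.loads(body)  # Fails early if the concatenated text is not valid JSON.
    return urllib.request.Request(
        logic_app_url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_delete_request(
    "https://example.invalid/logic-app-trigger", "inbound/2019/file.csv"
)
print(request.get_method(), request.data.decode("utf-8"))
# Sending it would be: urllib.request.urlopen(request)
```

One caveat with building JSON by concatenation: a path containing a double quote would produce invalid JSON, so it is worth validating or escaping the value if file names are not under your control.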

Up next

- Architecture discussion

- ALM and Infrastructure

- Notable implementation details